Return of the mark scheme

By Victoria Spalding

Published 17 Dec 2015

Objective testing (assessment that uses multiple-choice questions, for example) is relatively easy to mark. Answers are either correct or incorrect, and scores are simply tallied and set against a grade boundary. However, as a society we value a variety of skills such as writing, speaking and artistic expression. Responses that demonstrate these skills, known as constructed responses, are complex to assess because marking them requires examiners to exercise judgement.

Marking essay questions is particularly difficult. The range of possible answers is diverse, yet we need to be confident that a candidate would receive the same mark from any examiner applying the mark scheme. That means our mark schemes must give clear guidance while still being flexible enough to reward a wide range of valid answers. Not an easy task.

Essay questions are often marked using 'levels of response' mark schemes. These mark schemes describe performance at different levels of ability, and those descriptions are used to sort students' responses into mark bands, known as levels. For questions worth a large number of marks, known as high-tariff questions, level-based marking has been shown to be more reliable than a points-based system, i.e. a scheme that awards a mark for each relevant comment (Bramley, 2008).

To write a watertight mark scheme, you need to be able to predict how candidates will respond to the question. Crucially, you need a clear idea of the sort of responses you might get from candidates across a range of levels in order to write clear level descriptors:

'If credit is to be given for the quality of a response, [the mark scheme] needs to describe the features that should be used to discriminate between the various levels' (Ahmed & Pollitt, 2011)

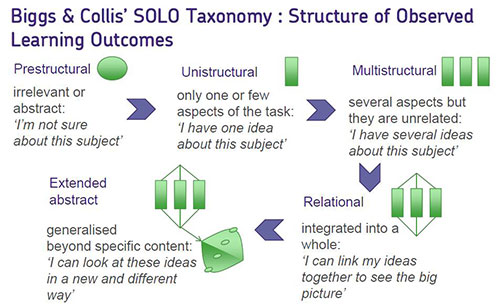

But how do you do that? Perhaps Han Solo can help, or, more accurately, the SOLO taxonomy developed by Biggs and Collis. The SOLO (Structure of the Observed Learning Outcome) taxonomy was developed to describe the different levels of learning that students demonstrate. Below is a diagram that shows their model:

It may be helpful to use the SOLO taxonomy when considering likely responses at varying levels.

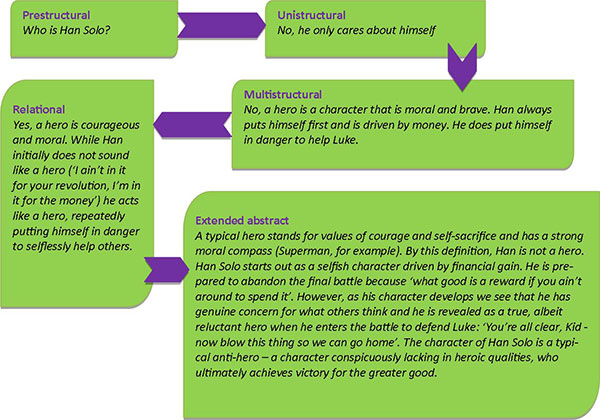

The diagram below shows how this model could apply to a Star Wars question:

Is Han Solo a hero?

Having used the SOLO taxonomy to help you consider the likely responses, you could then write level descriptors that describe the sort of knowledge and skills you would expect to see at each level.

To ensure reliable marking, each level descriptor needs to be clear and distinct. To be clear, it must visibly reflect the assessment objectives, so that anyone who reads it knows which qualities to look for. To be distinct, it must describe a level of performance that differs from the levels above and below it. This task is considerably easier once you can envisage performance at each level.

Going back to our Star Wars question, the top-level descriptor (for the highest marks) could be: 'A developed, informed argument that integrates knowledge of context and literary devices. Answers demonstrate breadth of knowledge and a balance of ideas.' Contrast this with the bottom-level descriptor, which might describe 'simple statements showing a limited range of knowledge.'

By defining performance at each level in this way, we can mark extended prose fairly and reliably. So, armed with this knowledge, go forth and write mark schemes, and may the Force be with you.

References

Ahmed, A., & Pollitt, A. (2011). Improving marking quality through a taxonomy of mark schemes. Assessment in Education: Principles, Policy & Practice, 18(3), 259–278. http://doi.org/10.1080/0969594X.2010.546775

Bramley, T. (2008, September). Mark scheme features associated with different levels of marker agreement. Paper presented at the British Educational Research Association (BERA) annual conference, Heriot-Watt University, Edinburgh.

About our blog

Discover more about the work our researchers are doing to help improve and develop our assessments, expertise and resources.

Email: research@aqa.org.uk