Where’s the value in ‘value-added’?

By Christopher Wheadon

Published 17 Oct 2013

If my children went to the James McCune Smith Community School in New York (also known as New York Public School 200), I wouldn’t rest until I had secured a certain Mr Carlos Munoz as their teacher. The fact that I neither live in New York nor have any children may rob my demands of some punch, but that certainly wouldn’t be the case for parents whose children are enrolled at this Manhattan elementary school.

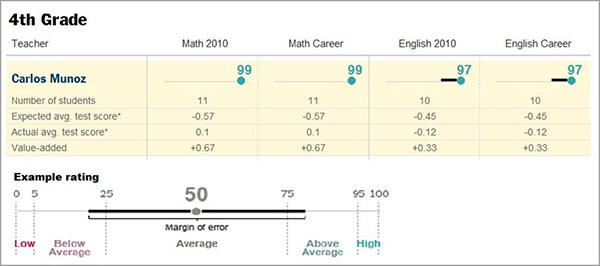

But why would I be so very keen that Mr Munoz teach my imaginary offspring? It’s all down to what’s revealed in this table:¹

It’s the ‘value-added’ that we’re interested in. Mr Munoz’s value-added, based on a class size of 10 or 11, is in the 97th to the 99th percentile. The figure represents the extent to which his pupils outperformed the average test score, after adjusting for their past performance and demographics. On these figures, Mr Munoz’s students made excellent progress under his instruction and did considerably better than expected in their 4th grade tests.
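To make the idea concrete, here is a minimal Python sketch of a teacher-level value-added score. It is not the New York model, which was considerably more elaborate and also adjusted for demographics; this version adjusts for prior score only. The idea: predict each pupil’s current score from their prior score with a straight-line regression, treat the gap between actual and predicted as that pupil’s residual, and average the residuals per teacher. The teachers (A, B, C), the scores and the simple percentile rule are all hypothetical.

```python
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least squares fit of ys = a + b * xs; returns (a, b)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b

def teacher_value_added(pupils):
    """pupils: list of (teacher, prior_score, current_score) tuples.

    A pupil's residual is actual score minus the score predicted from the
    prior score; a teacher's value-added is the mean residual of their pupils.
    """
    a, b = fit_line([p for _, p, _ in pupils], [c for _, _, c in pupils])
    residuals = {}
    for teacher, prior, current in pupils:
        residuals.setdefault(teacher, []).append(current - (a + b * prior))
    return {t: mean(r) for t, r in residuals.items()}

def percentile_rank(scores, teacher):
    """Percentage of the other teachers whose value-added is strictly lower."""
    others = [v for t, v in scores.items() if t != teacher]
    return 100 * sum(v < scores[teacher] for v in others) / len(others)

# Hypothetical data: three teachers, three pupils each (prior, current).
pupils = [
    ("A", 40, 55), ("A", 50, 65), ("A", 60, 75),
    ("B", 40, 50), ("B", 50, 60), ("B", 60, 70),
    ("C", 40, 45), ("C", 50, 55), ("C", 60, 65),
]
va = teacher_value_added(pupils)
print(va)                        # → {'A': 5.0, 'B': 0.0, 'C': -5.0}
print(percentile_rank(va, "A"))  # → 100.0
```

Teacher A’s pupils each score 5 points above what their prior scores predict, so A tops the ranking. Note that nothing in the calculation asks *why* A’s pupils beat the prediction.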

Teacher of the year?

In England, the Department for Education is committed to value-added measures and open data, so there is no reason why, in a year or two, we shouldn’t be able to look up the value-added scores of individual teachers in English schools. Will we soon be demanding that Carlos Munoz hop across the Atlantic?

Before we buy Carlos a first-class ticket to our local school, it may be worth pondering whether these data tell the whole story. In fact, perhaps high school teacher Stephen Lazar, at Harvest Collegiate High School, also in New York, should top our transfer list.

Stephen passionately pleads the case against value-added measures (Updated: My Blood, My Sweat, My Test Scores), maintaining that they don’t reflect a large part of what teaching is about. For example, they cannot tell you that he asked to teach students with special educational needs or with English as a second language.

But hang on, aren’t value-added scores meant to take into account the background of the students being taught?

All that glitters…

Schools in England have been using value-added measures at the teacher level for years via systems such as those available at the CEM Centre at Durham University. The CEM Centre systems typically take a baseline test of students’ general ability, and then measure their subsequent academic achievement against this baseline.

Around the time that performance-related pay was last high on the agenda, two teachers, John Critchlow and Steve Rogers, realised that where teaching took place in classes set by ability, the teachers of the top sets always achieved the best value-added (Does your value-added depend on which set you teach?). To get a bonus, you would just need to teach the top set.

So why do value-added measures fail when children are split by ability?

Imagine giving a random group of children an IQ test, then being given first pick of these children to form a football team. Assuming you had a good eye for ball skills, and paid no heed to their frankly irrelevant IQ scores, you would assemble a decent team that would go on to do well at football. If you used the IQ test as your baseline measure, they would appear to do especially well compared with other children of a similar IQ.

The flaw in the methodology is obvious. When you choose the best mathematicians, public speakers or footballers on the basis of a reasonably good knowledge of their specialist ability, and then compare their progress to a measure of general ability, they will appear to make excellent progress. The baseline cannot see the specialist ability you used to pick them, so it underestimates what they were always likely to achieve.
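The football-team argument can be checked with a small simulation, sketched here in Python. Every number in it is an assumption chosen for illustration: a general-ability score plays the role of the baseline test, a correlated ‘specialist’ ability (say, maths) drives the final test, and pupils are split into a top and bottom set using a somewhat noisy judgement of that specialist ability. Crucially, no teacher effect is simulated at all.

```python
import random
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least squares fit of ys = a + b * xs; returns (a, b)."""
    mx, my = mean(xs), mean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b

def simulate_setting(n=2000, seed=1):
    """Split pupils into two sets by judged specialist ability, then score each
    set's value-added against a general-ability baseline. No teacher effect is
    simulated, so any non-zero value-added comes from the selection alone."""
    rng = random.Random(seed)
    pupils = []
    for _ in range(n):
        general = rng.gauss(0, 1)                           # baseline test of general ability
        specialist = 0.6 * general + 0.8 * rng.gauss(0, 1)  # assumed correlation 0.6 with general
        judged = specialist + 0.3 * rng.gauss(0, 1)         # setter's "reasonably good knowledge"
        outcome = specialist + 0.3 * rng.gauss(0, 1)        # final test reflects specialist ability
        pupils.append((general, judged, outcome))
    # Expected outcome is predicted from the general-ability baseline only.
    a, b = fit_line([p[0] for p in pupils], [p[2] for p in pupils])
    pupils.sort(key=lambda p: p[1], reverse=True)           # top set = highest judged ability
    half = n // 2
    sets = {"top set": pupils[:half], "bottom set": pupils[half:]}
    return {name: mean(out - (a + b * gen) for gen, _, out in members)
            for name, members in sets.items()}

print(simulate_setting())
```

Under these assumptions the top set’s mean residual comes out clearly positive and the bottom set’s equally negative, even though neither ‘teacher’ contributed anything: the gap is produced purely by selecting on an ability the baseline does not measure.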

This limitation of value-added extends to the whole-school level. Just as sets with the highest-ability pupils are likely to achieve the best results, schools with selective intakes are likely to achieve the highest value-added. Research by the Sutton Trust found that the so-called ‘grammar school effect’ disappeared once the researchers took into account the fact that pupils who entered grammar schools were already progressing faster than their peers before they got there. The grammar schools are the equivalent of the nation’s top sets. Their pupils are a self-selected group, predisposed to prepare for and take entry tests and to perform well, so they do much better than pupils of similar general ability.
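Why controlling for prior progress can make a school effect vanish is sketched below in Python. This is not the Sutton Trust’s method; it is a toy model under stated assumptions: each pupil has an age-7 score and a persistent rate of progress, the age-11 score (the usual baseline) is the age-7 score plus that progress, measured without error to keep things clean, and selection into ‘grammar’ schools depends on both attainment and rate of progress, standing in for self-selection. Again, no school effect is simulated. The sketch compares the selected and unselected pupils’ mean residuals, first with the age-11 baseline alone and then with prior progress added to the model.

```python
import random
from statistics import mean

def residuals_1(x, y):
    """Residuals from an OLS fit of y on a single predictor x."""
    mx, my = mean(x), mean(y)
    b = sum((a - mx) * (c - my) for a, c in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return [c - my - b * (a - mx) for a, c in zip(x, y)]

def residuals_2(x1, x2, y):
    """Residuals from an OLS fit of y on two predictors (2x2 normal equations)."""
    m1, m2, my = mean(x1), mean(x2), mean(y)
    d1 = [a - m1 for a in x1]
    d2 = [a - m2 for a in x2]
    dy = [c - my for c in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(a * a for a in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * c for a, c in zip(d1, dy))
    s2y = sum(a * c for a, c in zip(d2, dy))
    det = s11 * s22 - s12 ** 2
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return [c - b1 * a - b2 * b for a, b, c in zip(d1, d2, dy)]

def grammar_gap(n=4000, share=0.25, seed=7):
    """Mean residual of selected minus unselected pupils, under two models."""
    rng = random.Random(seed)
    age7, age11, outcome, entry = [], [], [], []
    for _ in range(n):
        early = rng.gauss(0, 1)     # attainment at age 7
        growth = rng.gauss(0, 1)    # persistent rate of progress (assumption)
        base = early + growth       # attainment at age 11, the usual baseline
        age7.append(early)
        age11.append(base)
        outcome.append(base + growth + 0.3 * rng.gauss(0, 1))  # no school effect at all
        entry.append(base + growth + 0.5 * rng.gauss(0, 1))    # selection favours fast progressers
    cutoff = sorted(entry, reverse=True)[int(n * share) - 1]
    grammar = [e >= cutoff for e in entry]

    def gap(res):
        selected = [r for r, sel in zip(res, grammar) if sel]
        others = [r for r, sel in zip(res, grammar) if not sel]
        return mean(selected) - mean(others)

    prior_progress = [b - a for a, b in zip(age7, age11)]
    return {
        "baseline only": gap(residuals_1(age11, outcome)),
        "baseline + prior progress": gap(residuals_2(age11, prior_progress, outcome)),
    }

print(grammar_gap())
```

With the age-11 baseline alone, the selected pupils show a clear positive ‘effect’; once prior progress enters the model, the gap shrinks to roughly zero, because the apparent effect was only the faster progress those pupils were already making.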

So, while value-added is a better measure of school performance than raw test results, it remains imperfect. And Stephen Lazar has a case. He has chosen to teach the most challenging pupils and has done his best for them. What makes those pupils challenging is not, and cannot be, accurately captured by value-added.

So what now for my imaginary offspring? Well, perhaps along with my illustrious premiership footballing career, it would be best if they stayed firmly within my head, for the time being at least.

Chris Wheadon

¹ The data in the image were retrieved from http://www.schoolbook.org/school/236-ps-200-the-james-mccune-smith-school/teachers on February 14th 2013. It appears that SchoolBook no longer publishes teacher-level data. The release of teacher-level value-added scores was not planned, but followed requests under the Freedom of Information Law in 2010. Following a court ruling, the Department of Education in New York was legally obliged to make the data publicly available.

